This is Part 1 of a two-part series exploring attention as infrastructure and a main source of value creation across politics, markets, and the economy.

What Trump, Mamdani, and Cluely Reveal About the Attention Economy

Trump bombed Iran over the weekend and announced it via Truth Social. Maybe not the first time posting became policy, but it’s hard to find a clearer example. I was in the middle of the grocery store grabbing bananas when I got the notification, which just underscored how strange and disorienting this all is.

Yesterday, Zohran Mamdani won the NYC Democratic Mayoral Primary, a highly contested race that he ran with sheer narrative discipline and command of the digital. Even if you don’t like his policies (I think many of them are unworkable), the campaign was good! He walked the entire length of Manhattan. He was everywhere, all the time.

And finally, a few days before both of those, a startup called Cluely[1] raised a $15 million round led by a16z[2]. The company’s modus operandi is ‘cheat on everything’. Like sure, of course. But a16z didn’t invest based on that! They invested based on Cluely’s attention capture capability! Cluely is good at getting people to pay attention to them and is largely copying the enterprise Jake Paul playbook (stunts, virality, nihilism, and a vibe-first narrative) for B2C AI apps. We’ve already seen “brain-rot marketing” take over culture, so it was only a matter of time before it hit the startup world.

But these three things - geopolitical conflict, a mayoral primary, a startup raise - are indicators of something I want to tie a thread through in this piece:

Attention is infrastructure: It determines what gets funded, elected, or built.

Narrative is capital: It drives flows of money, policy, and sentiment.

Speculation is the operating layer between the two - it’s how belief is tested, priced, and converted into outcomes before institutions act.

What we’re seeing isn’t just a media trend. It’s a shift in the architecture of power. Attention → Speculation → Allocation. This is the new supply chain.

Traditional economic theory assumes information flows serve resource allocation. But increasingly, resource allocation serves attention flows. We've moved from an economy where attention supports other forms of value creation to one where attention is the value creation.

How to Think About Attention

Traditional economic substrates are land, labor, capital - bedrock inputs to make stuff. But now, the foundational input is attention.

Trump said he was going to take two weeks or so to think through the Iran bombings… and then just did it? The NYT later reported that the decision to strike was influenced in part by how the Israeli campaign was “playing” on Fox News, so Trump’s response very much felt like a reactive spectacle[3] rather than military strategy. And it played like theater!

Iran knew Trump might do something because of his incessant posts.

Military officials feared that Trump’s posts were compromising operational secrecy, so they ordered a diversionary B-2 flight to distract from the real mission.

Iran simply moved their uranium to a different spot, and now we don’t seem to know where it is.

It seems like the bombs didn’t really hit what they were supposed to?

Iran retaliated a few days later by striking US Gulf bases, signaling to the US ahead of time that the bases would be hit. (I was also in the grocery store when I found out about this one.)

The fact that they didn’t close down the Strait of Hormuz is a sign that things might (?) be petering out from here (I write tentatively on the morning of June 25).

Trump called for a ceasefire, and both Israel and Iran seem to be ignoring him.

He said China can continue buying Iranian oil, apparently lifting the sanctions?

He got very mad (understandably) on live TV.

Trump only notified Republican members of Congress about the strikes, which isn’t great. It also seems that intelligence briefings said that Iran wasn’t actively weaponizing its nuclear program (making the bombing illegal). But, when Congress is as useful as a fish flopping next to a giant body of water, the law matters less than content strategy.

Trump yet again inverted system architecture - flipping how information and decision-making traditionally flow. Military strategy and diplomacy all became subordinate to social media dynamics. But he is moving on as if war is only a weekend thing. As his State Department spokeswoman said:

I’m not going to get ahead of the president or try to guess what his strategy will be. Things happen quickly and I think we’ll find out sooner than later.

And this gets into the tricky part of the attention economy - it’s easy to get eyeballs, sure, and people will do wilder and wilder stunts to keep eyes on them. But what happens when people stop paying attention? Here, it’s that Iran will likely continue pursuing a nuclear bomb because all they have to do to understand the narrative is watch the feed, and that holds heavy, heavy consequences.

Zohran Mamdani

There are many great essays on Mamdani (Derek Thompson just joined Substack!), so I won’t belabor it too much here. But yesterday, Zohran Mamdani won the NYC Democratic Mayoral Primary. A 33-year-old democratic socialist just beat a former governor - Andrew Cuomo - by running a campaign that got a lot of attention. 4.5 months ago he was polling at 1%!

Most of his messaging was about affordability (which is also why Trump won among young voters). He is a master of short-form video and podcasts, but he was also physically in NYC, again, walking the length of the city. Cuomo was advertising on TV and raised $25 million in the largest super PAC ever created in an NYC mayoral campaign (!), but that simply didn’t matter.

And as many have said, Mamdani is the most Trump-like candidate *in messaging* the left has produced - he had a deeply online campaign, he did every interview he could, he has a loyal base (they knocked on 1.5 million doors!), and clear messaging.

Mamdani proved attention is the path to institutional override. Just like Trump, but from the other side.

The shift isn’t that surprising, and it will happen more now, on both ends. People are largely frustrated with the status quo. There is excitement in rule bending, like JD Vance sticking up a middle finger or Trump dropping an F-bomb on C-SPAN.

The ideas matter, sure. People voted for Trump because they wanted deportations and unwokeness but also because he was different and new. Mamdani promises affordability and fresh new ideas. It feels like change in a world where people are not listened to.[4]

How They All Tie Together

All three stories - Trump bombing Iran on social, Mamdani walking Manhattan on TikTok, Cluely’s raise - have one thing in common: the power came from attention, and attention came from narrative discipline.

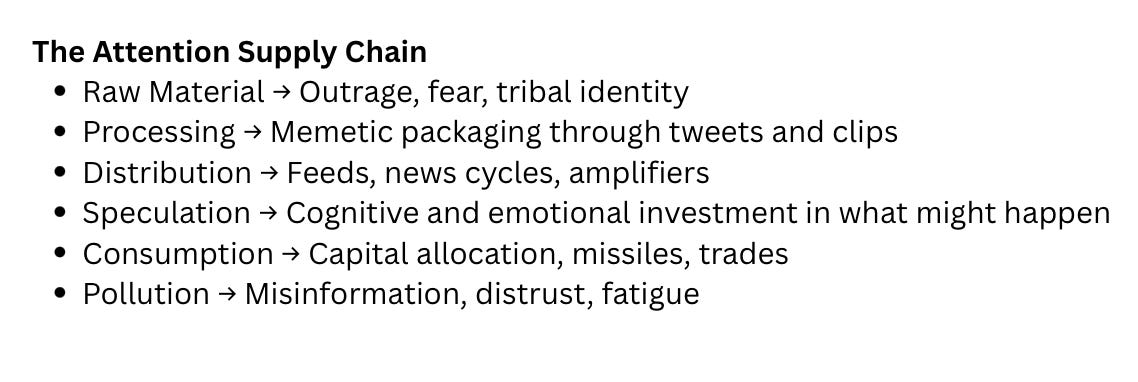

It operates like a supply chain of sorts:

You can trace this pipeline through our examples.

Trump's Iran strikes:

Raw Material: Geopolitical grievance, national pride, fear of weakness.

Processing: Posts cryptic threats, shares Fox News segments, teases retaliation.

Distribution: Amplified by cable news, echo chambers.

Speculation: People begin trading not on strategy but on vibes. Prediction markets react.

Consumption: He bombs Iran.

Pollution: Strategic incoherence, legal ambiguity, normalization of feed-driven warfare.

Mamdani's campaign:

Raw Material: Affordability crisis, wealth inequality, housing despair.

Processing: TikToks, interviews, walking Manhattan.

Distribution: Lefty podcasts, short-form clips.

Speculation: Can he actually win? Would free buses work? What if NYC really went full socialist? Young voters, disillusioned moderates, and donors place bets in energy, time, and belief (and in prediction markets[5]).

Consumption: He wins the primary. Cuomo concedes.

Pollution: Outrage cycles, factionalism, increased ideological sorting.

Cluely fits this cycle too. When a16z funds Cluely based purely on attention capture capability, they're legitimizing attention as a fundable asset class. That sends a signal to every other founder - build for virality, not just utility. It’s American Dynamism!

Attention is the raw material of economic, political, and military action. But speculation is what operationalizes that attention: the bets people place (emotionally, politically, financially) on what narrative will become real.

And in politics, this type of speculation has become the closest thing to agency for people who feel like they are no longer served by the economy. People speculate on ideas, personalities, etc. Why wouldn’t they? But when this happens, you end up with a system optimized for speed and virality rather than stability or accuracy.

And I think that’s why there’s an informal coalition that controls resource allocation now - podcasters like Joe Rogan, YouTubers like MrBeast, guys like Steve Bannon and Tucker Carlson. Elon Musk, who has not said one word about what's going on, is a narrative thermostat. They are masters of speed and virality.

They all take that speculation and determine what gets attention, which increasingly determines what gets resources. The world is learning from them now - startups, politicians, geopolitical strategy. The "checks and balances" no longer come from Congress or the courts, but from the feed.

We’ve Been Here Before

What I'm describing isn't entirely unprecedented, of course. Many have discussed it.[6] Back in 1971, Herbert Simon wrote in Designing Organizations for an Information-Rich World:

In an information-rich world, the wealth of information means a dearth of something else: a scarcity of whatever it is that information consumes. What information consumes is rather obvious: it consumes the attention of its recipients. Hence a wealth of information creates a poverty of attention and a need to allocate that attention efficiently among the overabundance of information sources that might consume it.

Information abundance creates attention scarcity! By 1997 (!), Michael Goldhaber pushed this further with The Attention Economy and the Net, arguing that attention was becoming the new currency of the digital age:

The attention economy brings with it its own kind of wealth, its own class divisions - stars vs. fans - and its own forms of property, all of which make it incompatible with the industrial-money-market based economy it bids fair to replace. Success will come to those who best accommodate to this new reality.

Robert Shiller coined narrative economics, arguing that stories drive economic behavior. I think we are in a new iteration of all of this where the stories aren't just influencing economic activity, they are the economic activity. Attention is a precursor to wealth (in many ways) and speculation drives it.

Succinctly… everything feels like crypto now? Crypto doesn’t represent “real” value (some things in the industry do, but broad brushstrokes), but it synthesizes it through speculation and belief. Vibes, volatility, and mindshare, if you will. We're now living in a system where attention dynamics are the operating system for resource allocation, political decisions, and identity formation.

What Comes Next

This intersection of speculation and attention feels ignored outside of markets. And the question isn't whether we can build better housing or infrastructure - though we desperately need both. The question is whether we can build anything coherent when the resource allocation system rewards attention over everything else.

Because right now, the person who can generate the most compelling speculation about the future gets the most power to create it, regardless of whether they understand the consequences.

There's no neutral outside from which to observe these systems. We're building the tools that are rebuilding us, and that will have consequences across the board. There really isn't an offline anymore, if only because of this administration. We're all participants in a cognitive economy that trades in attention, belief, and behavior. Shape the feed, shape the future. What happens when everything becomes an attention-speculation machine?

Next week, in Part 2, I’ll attempt to answer that question and walk through what could be built and the generational implications. Subscribe to get that in your inbox!

[1] There’s a really interesting interview with the founder where he gets into risk, and he really seems like a person who saw the system as broken and exploited that. It makes sense.

[2] a16z just finished a big rebrand around American Dynamism, which I guess at this point is mostly about turning narrative dominance into capital access.

[3] Hegseth said they had been planning the strikes for months, which also feels… weird.

[4] Only 27% of Americans support the Big Beautiful Bill, for example.

[5] An interesting example of this is Goldman Sachs using Polymarket for oil price analysis. Prediction markets built on social media sentiment (vibes, if you will) are becoming inputs to institutional investment decisions. Speculation fills the gap between attention and action. It’s the intermediary step where belief gets leveraged.

[6] Baudrillard on simulation, Debord on spectacle, Girard on mimetic desire, etc. Many!
